Security & Compliance

🤗 Inference Endpoints is built with security and secure inference at its core. Below you can find an overview of the security measures we have in place.

Data Security/Privacy

Model Database does not store any customer data in the form of payloads or tokens passed to the Inference Endpoint. Logs are stored for 30 days. Every Inference Endpoint uses TLS/SSL to encrypt data in transit.

We also recommend using AWS or Azure Private Link for organizations. This allows you to access your Inference Endpoint through a private connection, without exposing it to the internet.

Model Database also offers a Business Associate Addendum or a GDPR data processing agreement through the Inference Endpoints enterprise plan.

Model Security/Privacy

You can set a model repository as private if you do not want to expose it publicly. Model Database does not own any model or data you upload to the Model Database Hub. Model Database runs malware and pickle scans on the contents of the model repository, as it does for all items on the Hub.

Inference Endpoints and Hub Security

The Model Database Hub, of which Inference Endpoints is a part, is also SOC2 Type 2 certified. The Model Database Hub offers Role Based Access Control. For more on Hub security: https://huggingface.co/docs/hub/security

Inference Endpoint Security Level

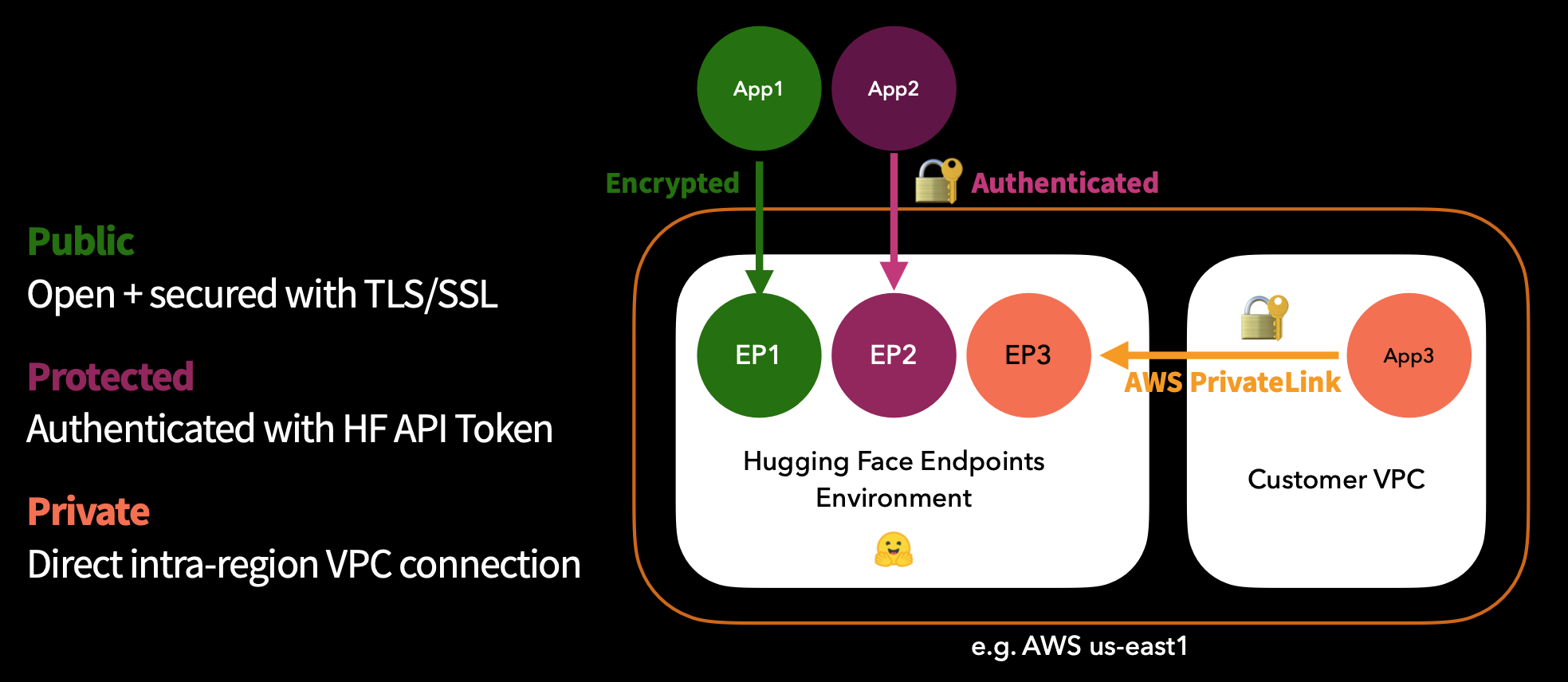

We currently offer three types of endpoints, in order of increasing security level:

- Public: A Public Endpoint is available from the internet, secured with TLS/SSL, and requires no authentication.

- Protected: A Protected Endpoint is available from the internet, secured with TLS/SSL, and requires a valid Model Database token for authentication.

- Private: A Private Endpoint is only available through an intra-region secured AWS or Azure PrivateLink connection. Private Endpoints are not accessible from the internet.
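To illustrate the difference between Public and Protected Endpoints from the client side, here is a minimal sketch that builds an HTTPS request with and without a token. It assumes the token is sent as a bearer token in the `Authorization` header and that the endpoint accepts a JSON body with an `inputs` field; the helper name `build_request`, the URL, and the token value are hypothetical placeholders, and the exact payload shape depends on the deployed model.

```python
import json
import urllib.request
from typing import Optional


def build_request(
    endpoint_url: str,
    payload: dict,
    token: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request for an Inference Endpoint.

    Hypothetical helper for illustration only. Pass `token` for a
    Protected Endpoint; leave it as None for a Public Endpoint.
    """
    headers = {"Content-Type": "application/json"}
    if token is not None:
        # Assumption: the token is passed as a bearer token.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Placeholder URL and token; replace with your own endpoint values.
protected = build_request(
    "https://my-endpoint.example.invalid",
    {"inputs": "Hello"},
    token="hf_xxx",
)
public = build_request("https://my-endpoint.example.invalid", {"inputs": "Hello"})
```

Passing either request to `urllib.request.urlopen` would send it over TLS. Under the assumptions above, a Protected Endpoint would be expected to reject the request that has no `Authorization` header.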

Public and Protected Endpoints do not require any additional configuration. For Private Endpoints, you need to provide the ID of the AWS account that should have access to 🤗 Inference Endpoints.

Model Database Privacy Policy - https://huggingface.co/privacy