Overview

Language model: gelectra-large-germanquad

Language: German

Training data: GermanQuAD train set (~12 MB)

Eval data: GermanQuAD test set (~5 MB)

Infrastructure: 1x V100 GPU

Published: Apr 21st, 2021

Details

- We trained a German question answering model with a gelectra-large model as its basis.

- The dataset is GermanQuAD, a new German-language dataset that we hand-annotated and published online.

- The training set is one-way annotated and contains 11518 questions and 11518 answers. The test set is three-way annotated: it contains 2204 questions with 2204·3 − 76 = 6536 answers, because we removed 76 wrong answers.

See https://deepset.ai/germanquad for more details and to download the dataset in SQuAD format.
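Because GermanQuAD is distributed in SQuAD format (articles containing paragraphs, each with a list of question/answer entries), the question and answer counts above can be checked directly against a downloaded file. The sketch below assumes the standard SQuAD v1.1 JSON layout (`data` → `paragraphs` → `qas` → `answers`); the helper names, the toy record, and the file name in the final comment are hypothetical and not taken from GermanQuAD itself.

```python
import json
from typing import Tuple


def load_squad(path: str) -> dict:
    """Load a SQuAD-format JSON file (the path is hypothetical, e.g. a downloaded split)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def count_questions_and_answers(squad: dict) -> Tuple[int, int]:
    """Return (number of questions, number of annotated answers) in a SQuAD-format dict."""
    n_questions = 0
    n_answers = 0
    for article in squad["data"]:
        for paragraph in article["paragraphs"]:
            for qa in paragraph["qas"]:
                n_questions += 1
                n_answers += len(qa["answers"])  # three per question in a 3-way annotated set
    return n_questions, n_answers


# Hypothetical toy record in SQuAD layout (not taken from GermanQuAD).
toy = {
    "data": [
        {
            "title": "Berlin",
            "paragraphs": [
                {
                    "context": "Seit 1990 ist Berlin die Hauptstadt Deutschlands.",
                    "qas": [
                        {
                            "id": "q1",
                            "question": "Was ist die Hauptstadt Deutschlands?",
                            "answers": [
                                {"text": "Berlin", "answer_start": 14},
                                {"text": "Berlin", "answer_start": 14},
                                {"text": "Berlin", "answer_start": 14},
                            ],
                        }
                    ],
                }
            ],
        }
    ]
}

print(count_questions_and_answers(toy))  # (1, 3)
# With real data (hypothetical file name):
# print(count_questions_and_answers(load_squad("GermanQuAD_test.json")))
```

Run on the published test split, the counts should match the 2204 questions and 6536 answers stated above, provided the downloaded file is the same version.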

Hyperparameters

batch_size = 24

n_epochs = 2

max_seq_len = 384

learning_rate = 3e-5

lr_schedule = LinearWarmup

embeds_dropout_prob = 0.1
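The hyperparameters above can be read as a plain config plus a learning-rate schedule. This sketch assumes that `LinearWarmup` means a linear ramp from 0 to `learning_rate` followed by a linear decay to 0, the same shape as `get_linear_schedule_with_warmup` in Hugging Face transformers; the card does not confirm this, and it does not give a warmup fraction, so `WARMUP_PROPORTION = 0.2` is a hypothetical value. The step count further assumes one training feature per question and no gradient accumulation. In practice, contexts longer than `max_seq_len = 384` tokens are split into several features, so the real number of steps may be higher.

```python
import math

# Hyperparameters as listed in the model card.
HPARAMS = {
    "batch_size": 24,
    "n_epochs": 2,
    "max_seq_len": 384,
    "learning_rate": 3e-5,
    "lr_schedule": "LinearWarmup",
    "embeds_dropout_prob": 0.1,
}

# Hypothetical: the warmup fraction is not stated in the card.
WARMUP_PROPORTION = 0.2


def total_steps(n_features: int, batch_size: int, n_epochs: int) -> int:
    """Optimizer steps, assuming one update per batch and no gradient accumulation."""
    return math.ceil(n_features / batch_size) * n_epochs


def linear_warmup_lr(step: int, peak_lr: float, n_total: int, warmup_proportion: float) -> float:
    """Assumed LinearWarmup shape: ramp 0 -> peak_lr, then decay linearly to 0 at n_total."""
    n_warmup = int(warmup_proportion * n_total)
    if step < n_warmup:
        return peak_lr * step / max(1, n_warmup)
    return peak_lr * max(0.0, (n_total - step) / max(1, n_total - n_warmup))


# Assumption: one feature per training question (11518 questions).
n_total = total_steps(11518, HPARAMS["batch_size"], HPARAMS["n_epochs"])
print(n_total)  # 960
for step in (0, 96, 192, 576, 960):
    print(step, linear_warmup_lr(step, HPARAMS["learning_rate"], n_total, WARMUP_PROPORTION))
```

Under these assumptions, 11518 questions at batch size 24 give 480 steps per epoch and 960 steps over 2 epochs, with the learning rate peaking at 3e-5 after the (hypothetical) 192 warmup steps.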

Performance

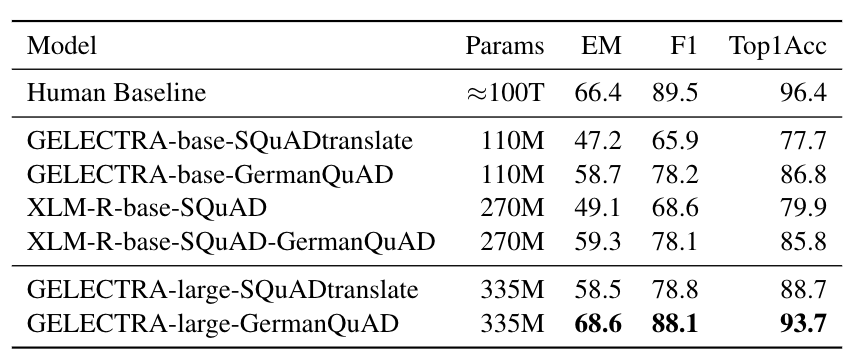

We evaluated the extractive question answering performance on our GermanQuAD test set.

The model names in the figure indicate the model type and the training data.

For fine-tuning XLM-RoBERTa, we used the English SQuAD v2.0 dataset.

The GELECTRA models are warm-started on the German translation of SQuAD v1.1 and fine-tuned on GermanQuAD.

The human baseline was computed on the three-way annotated test set by taking one answer as the prediction and the other two as the ground truth.
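The human baseline can be sketched with SQuAD-style exact match (EM) and token-level F1, scoring one annotator's answer against the remaining answers and keeping the best match. This is a minimal sketch under several assumptions the card does not state: the first answer is used as the prediction, normalization is simplified (lowercasing, stripping ASCII punctuation, collapsing whitespace, with no article removal), and questions left with fewer than two answers after the 76 removals are skipped. `normalize`, `human_baseline`, and the toy answers are hypothetical.

```python
import string
from collections import Counter
from statistics import mean
from typing import Callable, Sequence

_PUNCT = set(string.punctuation)


def normalize(text: str) -> str:
    """Simplified normalization: lowercase, drop ASCII punctuation, collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in _PUNCT)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    return float(normalize(prediction) == normalize(reference))


def f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between normalized prediction and reference."""
    pred = normalize(prediction).split()
    gold = normalize(reference).split()
    n_same = sum((Counter(pred) & Counter(gold)).values())  # multiset intersection
    if n_same == 0:
        return 0.0
    precision = n_same / len(pred)
    recall = n_same / len(gold)
    return 2 * precision * recall / (precision + recall)


def human_baseline(
    answer_sets: Sequence[Sequence[str]], metric: Callable[[str, str], float]
) -> float:
    """Score answers[0] against answers[1:] (best match) and average over questions."""
    scores = []
    for answers in answer_sets:
        if len(answers) < 2:
            continue  # assumption: no second answer to compare against
        prediction, references = answers[0], answers[1:]
        scores.append(max(metric(prediction, ref) for ref in references))
    if not scores:
        raise ValueError("need at least one question with two or more answers")
    return mean(scores)


# Hypothetical three-way annotated answers for two questions.
toy_answer_sets = [
    ["Berlin", "berlin", "in Berlin"],
    ["im Jahr 1990", "1990", "1991"],
]
print(human_baseline(toy_answer_sets, exact_match))  # 0.5
print(round(human_baseline(toy_answer_sets, f1), 3))  # 0.75
```

Taking the maximum over the remaining answers mirrors how SQuAD-style evaluation handles multiple ground-truth answers, so the human baseline and the model scores are computed the same way.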

Authors

Timo Möller

Julian Risch

Malte Pietsch

About us

deepset is the company behind the open-source NLP framework Haystack, which is designed to help you build production-ready NLP systems for question answering, summarization, ranking, and more.

Some of our other work:

- Distilled roberta-base-squad2 (aka "tinyroberta-squad2")

- German BERT (aka "bert-base-german-cased")

- GermanQuAD and GermanDPR datasets and models (aka "gelectra-base-germanquad", "gbert-base-germandpr")

Get in touch and join the Haystack community

For more info on Haystack, visit our GitHub repo and documentation.

We also have a Discord community open to everyone!


By the way: we're hiring!
